Although there are many articles about this topic, I decided to document this in a post for my future self and to share with all of you the approach I usually use to separate an application depending on specific roles.

The Difference Between Active Directory and LDAP

Any hacker knows the keys to the network are in Active Directory (AD). Once a hacker has access to one of your user accounts, it’s a race against you and your data security protections to see if you can stop them before they can start a data breach.

It’s important to know Active Directory backwards and forwards in order to protect your network from unauthorized access – and that includes understanding LDAP.

What is LDAP?

LDAP (Lightweight Directory Access Protocol) is an open and cross platform protocol used for directory services authentication.

LDAP provides the communication language that applications use to communicate with other directory services servers. Directory services store the users, passwords, and computer accounts, and share that information with other entities on the network.

What is Active Directory?

Active Directory is a directory services implementation that provides all sorts of functionality like authentication, group and user management, policy administration and more.

Active Directory (AD) supports both Kerberos and LDAP – Microsoft AD is by far the most common directory services system in use today. AD provides Single-SignOn (SSO) and works well in the office and over VPN. AD and Kerberos are not cross platform, which is one of the reasons companies are implementing access management software to manage logins from many different devices and platforms in a single place. AD does support LDAP, which means it can still be part of your overall access management scheme.

Active Directory is just one example of a directory service that supports LDAP. There are other flavors, too: Red Hat Directory Service, OpenLDAP, Apache Directory Server, and more.

LDAP vs. Active Directory

LDAP is a way of speaking to Active Directory.

LDAP is a protocol that many different directory services and access management solutions can understand.

The relationship between AD and LDAP is much like the relationship between Apache and HTTP:

- HTTP is a web protocol.

- Apache is a web server that uses the HTTP protocol.

- LDAP is a directory services protocol.

- Active Directory is a directory server that uses the LDAP protocol.

Occasionally you’ll hear someone say, “We don’t have Active Directory, but we have LDAP.” What they probably mean is that they have another product, such as OpenLDAP, which is an LDAP server.

It’s kind of like someone saying “We have HTTP” when they really meant “We have an Apache web server.”

What is LDAP Authentication?

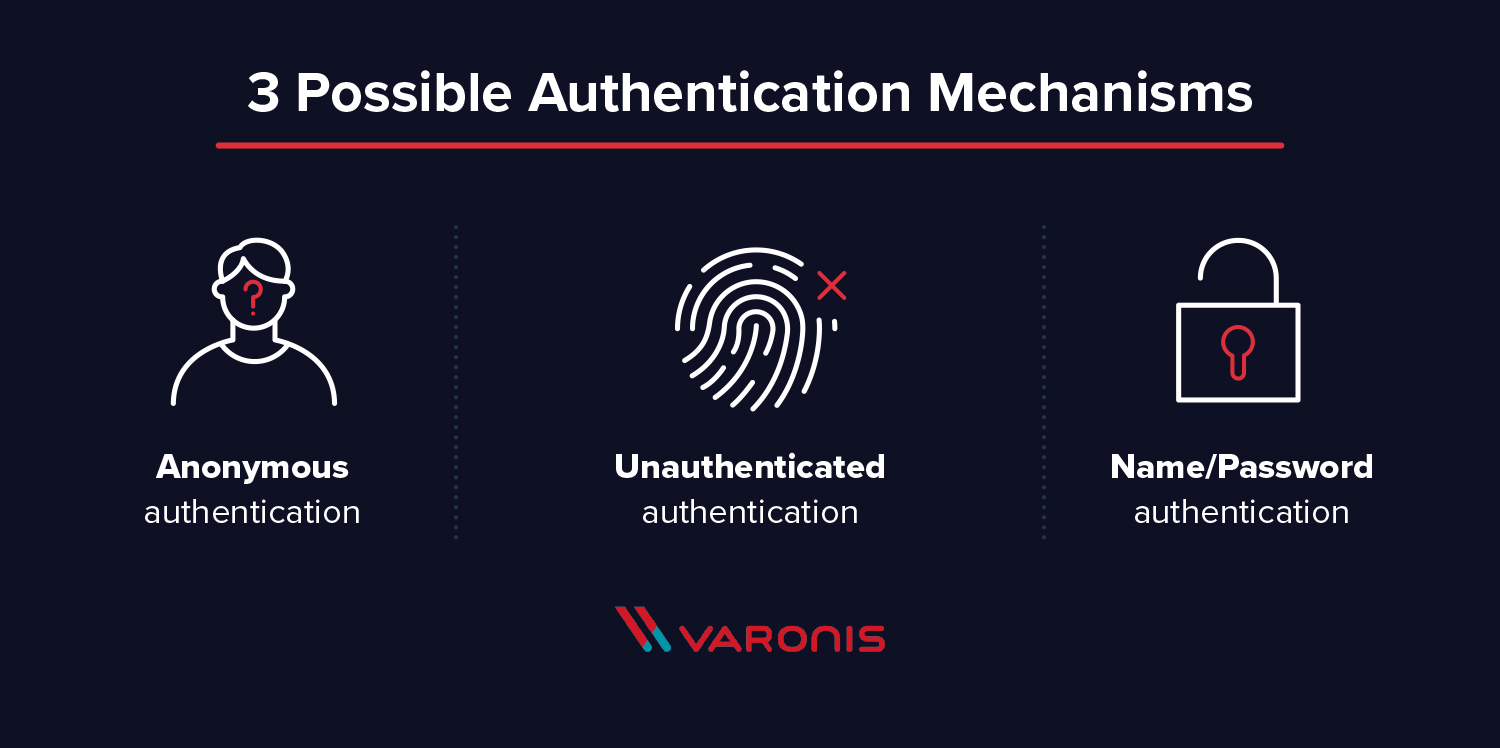

There are two options for LDAP authentication in LDAP v3 – simple and SASL (Simple Authentication and Security Layer).

Simple authentication allows for three possible authentication mechanisms:

- Anonymous authentication: Grants client anonymous status to LDAP.

- Unauthenticated authentication: For logging purposes only, should not grant access to a client.

- Name/Password authentication: Grants access to the server based on the credentials supplied – simple user/pass authentication is not secure and is not suitable for authentication without confidentiality protection.

SASL authentication binds the LDAP server to another authentication mechanism, like Kerberos. The LDAP server uses the LDAP protocol to send an LDAP message to the other authorization service. That initiates a series of challenge response messages that result in either a successful authentication or a failure to authenticate.

It’s important to note that LDAP passes all of those messages in clear text by default, so anyone with a network sniffer can read the packets. You need to add TLS encryption or similar to keep your usernames and passwords safe.

What is an LDAP Query?

An LDAP query is a command that asks a directory service for some information. For instance, if you’d like to see which groups a particular user is a part of, you’d submit a query that looks like this:

(&(objectClass=user)(sAMAccountName=yourUserName)

(memberof=CN=YourGroup,OU=Users,DC=YourDomain,DC=com))

Beautiful syntax, huh? Not quite as simple as typing a web address into your browser. Feels like LISP.

Luckily, in most cases, you won’t need to write LDAP queries. To maintain your sanity, you’ll perform all your directory services tasks through a point-and-click management interface like Varonis DatAdvantage or perhaps using a command line shell like PowerShell that abstracts away the details of the raw LDAP protocol.

TL;DR: LDAP is a protocol, and Active Directory is a server. LDAP authenticates Active Directory – it’s a set of guidelines to send and receive information (like usernames and passwords) to Active Directory. Want to learn more? Get a 1:1 AD demo and learn how Varonis helps protect your Active Directory environment.

Setting up Google Directory Sync with OpenLDAP

I’ll be adding updates to my new blog here: https://blog.salrashid.me/

Introduction

Tutorial on how to provision users and groups from a local LDAP server (OpenLDAP) into your G-suites domain. Any users and groups present in your local LDAP server will get created in G-suites. Once your users are present in your G-suites domain, you can authorize these users and groups access to Google Cloud Resources and other G-suites features.

This article is simply a tutorial on the simplified steps you would take for your on-prem directory server (ActiveDirectory, OpenLDAP). The Directory Sync utility overwrites any existing G-suites users and groups in favor of your local LDAP. As this is just a tutorial, only execute the ‘dry-run/simulate’ capabilities unless you are absolutely sure. You will need Domain Admin user privileges to your G-suites domain.

This sample will only sync the basic Users and Groups objects from your LDAP to G-suites.

Some references on the Directory Sync tool:

- https://support.google.com/a/answer/106368?hl=en

- https://support.google.com/a/answer/6162412?hl=en

- https://support.google.com/a/topic/2679497

- https://support.google.com/a/answer/6258071?hl=en

If you are a Google Cloud Platform user, consider migrating your organization after you have setup Directory Sync

- https://cloud.google.com/iam/docs/faq#how_do_i_use_google_groups_with_iam

- https://cloud.google.com/resource-manager/docs/overview

This article is a copy of my github page.

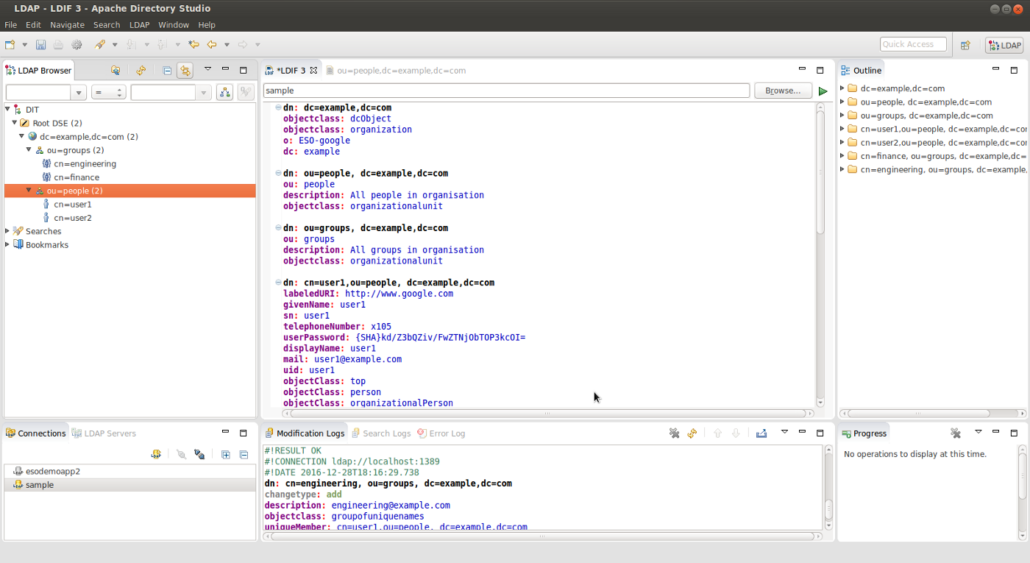

OpenLDAP configuration

This tutorial run a Docker container with a configurable OpenLDAP server that you can setup and load sample data reflecting your LDAP hierarchy. The the sample LDIF file is very basic and enables the domain dc=example, dc=com with users under ou=users and groups under ou=groups

You can edit the slapd.conf file and import.ldif file to map to your users and directory structure. You will need to initialize and load the LDIF files once the container starts up as shown below

** NOTE: I’ve made some specific modifications to the objectclass mappings for a users groups display name for simplicity **

Download the sample Dockerfile and LDAP configuration

- Dockerfile Initializes a plain OpenLDAP server on secure (ldaps://) and insecure (ldap://) ports.

- slapd.conf OpenLDAP configuration that defines the root password, baseDN and ACLs to apply

- import.ldif Sample users and groups

- CA_crt.pem, ldap_crt.pem, ldap_key.pem Self-signed certificate and chain for ldaps://

Start the LDAP server

The first step is to setup the local LDAP server. You will need to clone the gitrepo to acquire the sample Dockerfile and ldap configurations.

Build the container

docker build -t myldap .

Start the container

docker run -p 1389:389 -p 1636:636 myldap slapd -h "ldap://0.0.0.0:389 ldaps://0.0.0.0:636" -d 3 -f /ldap/slapd.conf

Install LDAP utilities on the host

Either: Install some LDAP utilities you will need on the docker host

apt-get install ldap-utils

Alternatively, you can install an LDAP UI like Apache Directory Studio.

Initialize your LDAP server

Load the sample data

ldapadd -v -x -D "cn=admin,dc=example,dc=com" -w mypassword -H ldap://localhost:1389 -f import.ldif

If you used Apache Directory Studio, you can load and execute the .ldif file directly (“LDAP →New LDIF FIle”) after you establish a connection:

Verify via query

ldapsearch -v -x -D "cn=admin,dc=example,dc=com" -w mypassword -b "ou=people,dc=example,dc=com" -H ldap://localhost:1389

If you use Directory Studio, you can browse the imported LDAP structure in the console directly.

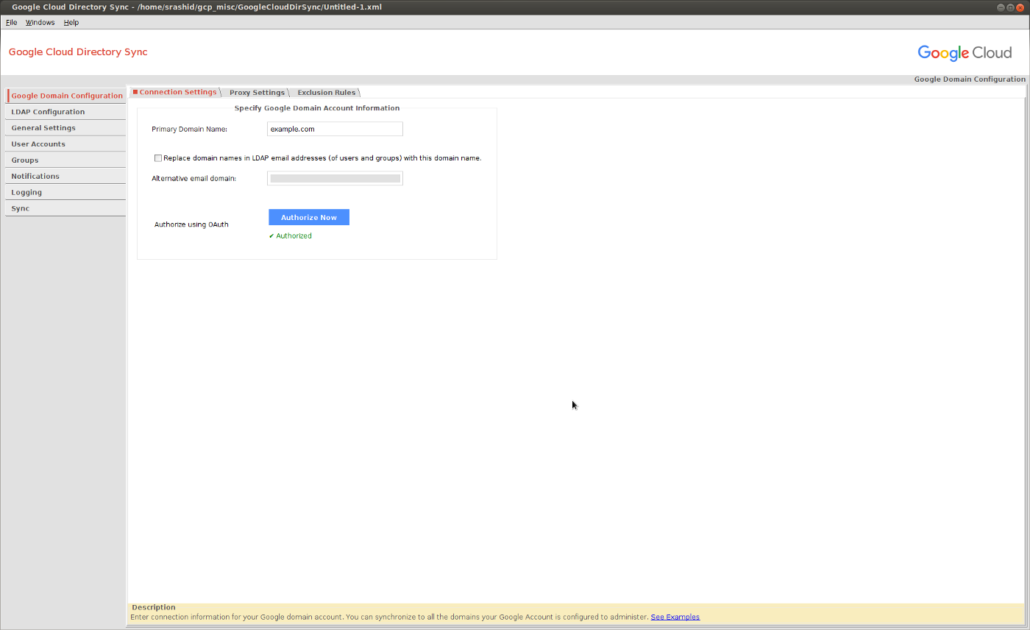

Setup dry-run Google Directory Sync

Once the LDAP server is running, we need to run the Directory Sync utility.

Again only run the Directory Sync in dry-run mode!!

Download and Start the Directory Sync utility Download: https://support.google.com/a/answer/6120989 Launch:

$ GoogleCloudDirSync/config-manager

Setup the Google Domain Configuration

You need to be domain super user to syn and run this utility:

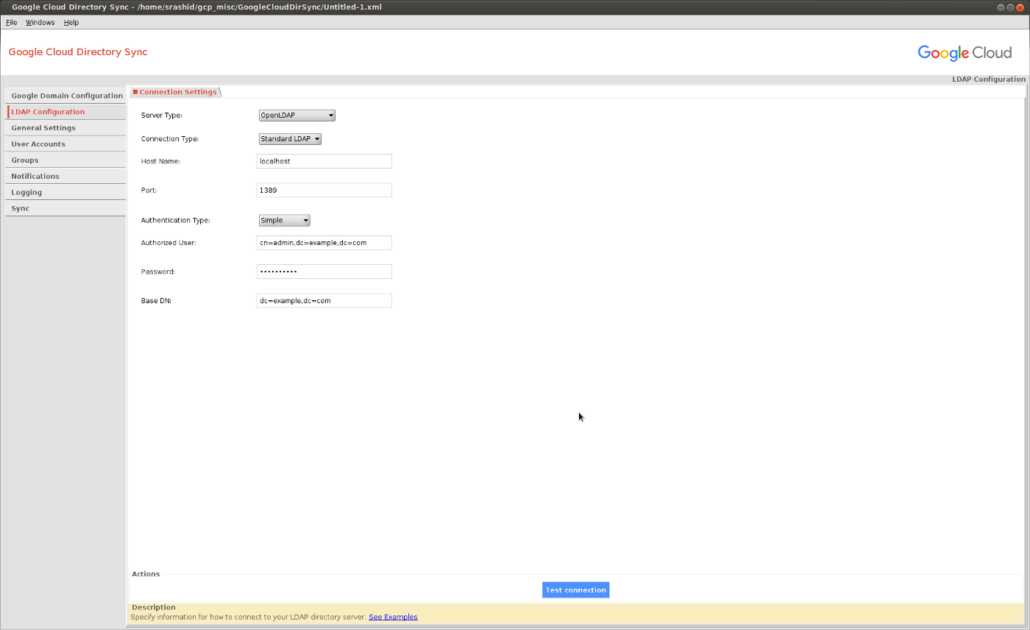

Connect to the LDAP server

Connect as cn=admin,dc=example,dc=com. The default password is mypassword

If you are using ldaps://, you need to add in the certificate chain first:

cd GoogleCloudDirSync/jre

$ keytool -keystore lib/security/cacerts -storepass changeit -import -file path_to_your/ldap_crt.pem -alias mydc

$ keytool -keystore lib/security/cacerts -storepass changeit -import -file path_to_your/CA_crt.pem -alias myca

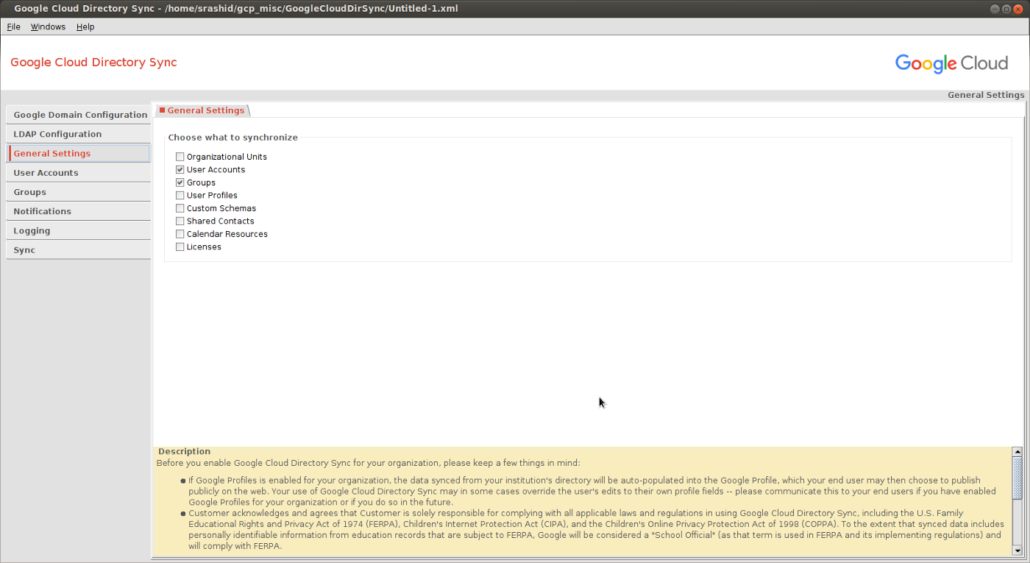

Select Users and Groups to sync

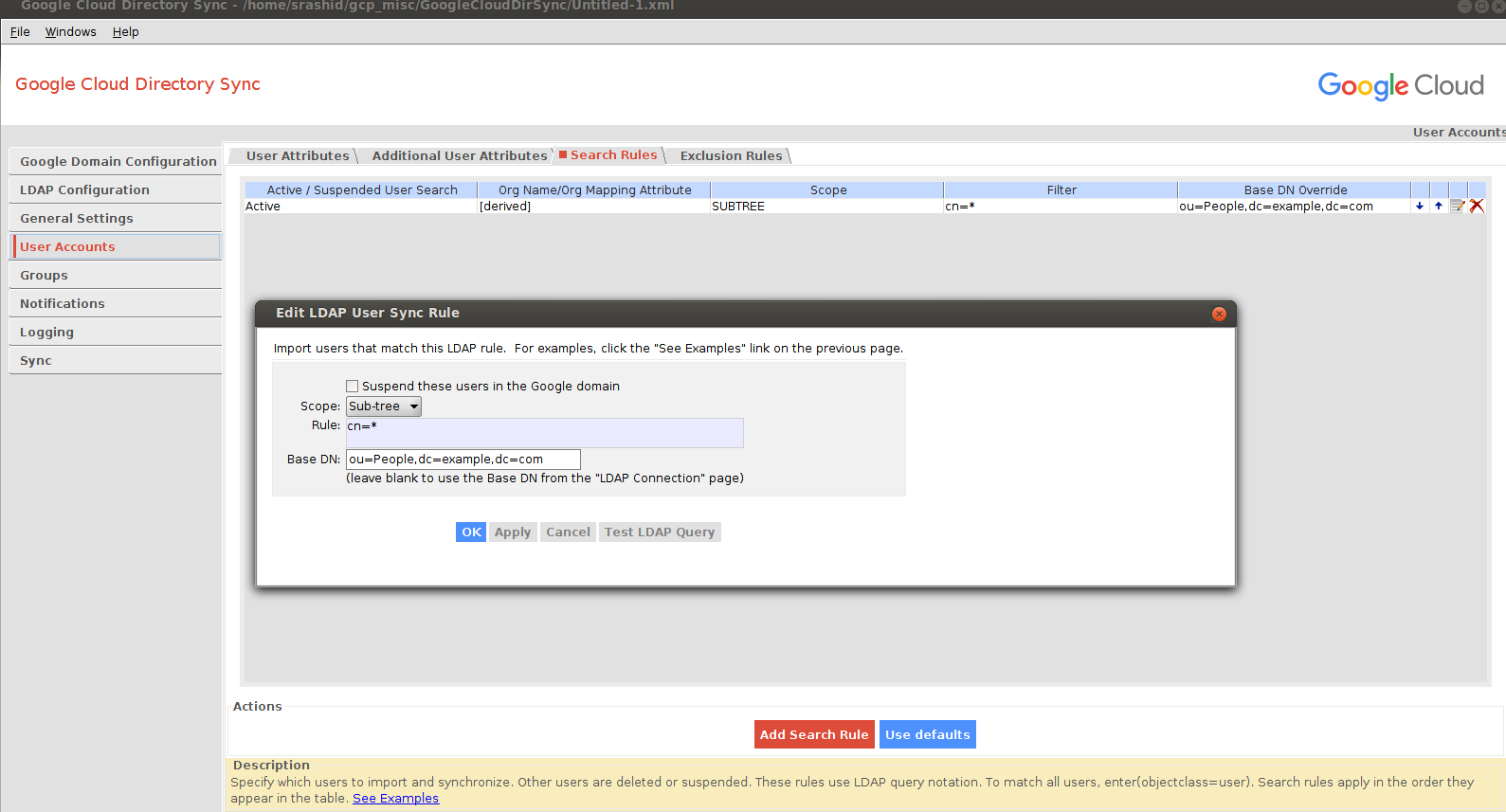

User Configuration

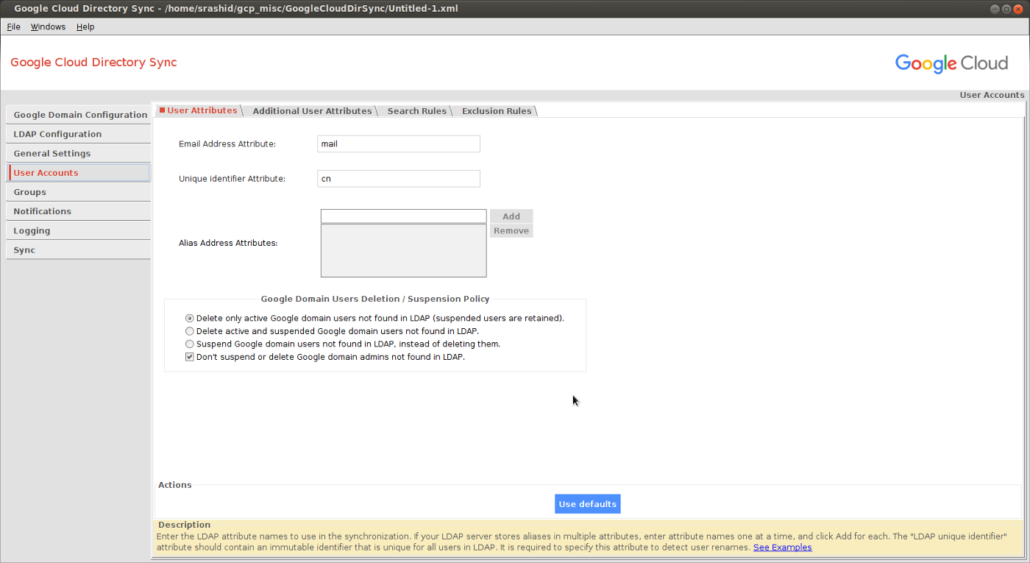

I’ve made some specific maps for LDAP attributes to G-suites attributes:

- cn -> Unique identifer attribute

- mail -> email address to use

- givenName -> Users Firstname

- sn -> Users Lastname

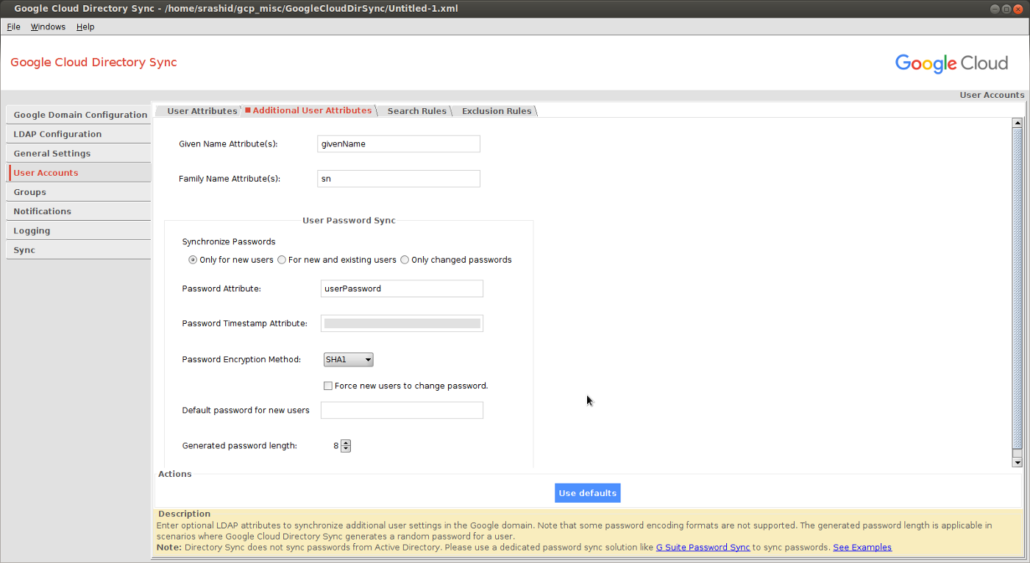

- userPassword -> SHA1 format for the users local LDAP password

The users in LDAP are found under ou=People,dc=example,dc=com and the primary identifier is cn

The SHA format for the password can be derived using sample utilities bundled with openldap:

slappasswd -h {SHA} -s mypassword

{SHA}kd/Z3bQZiv/FwZTNjObTOP3kcOI=

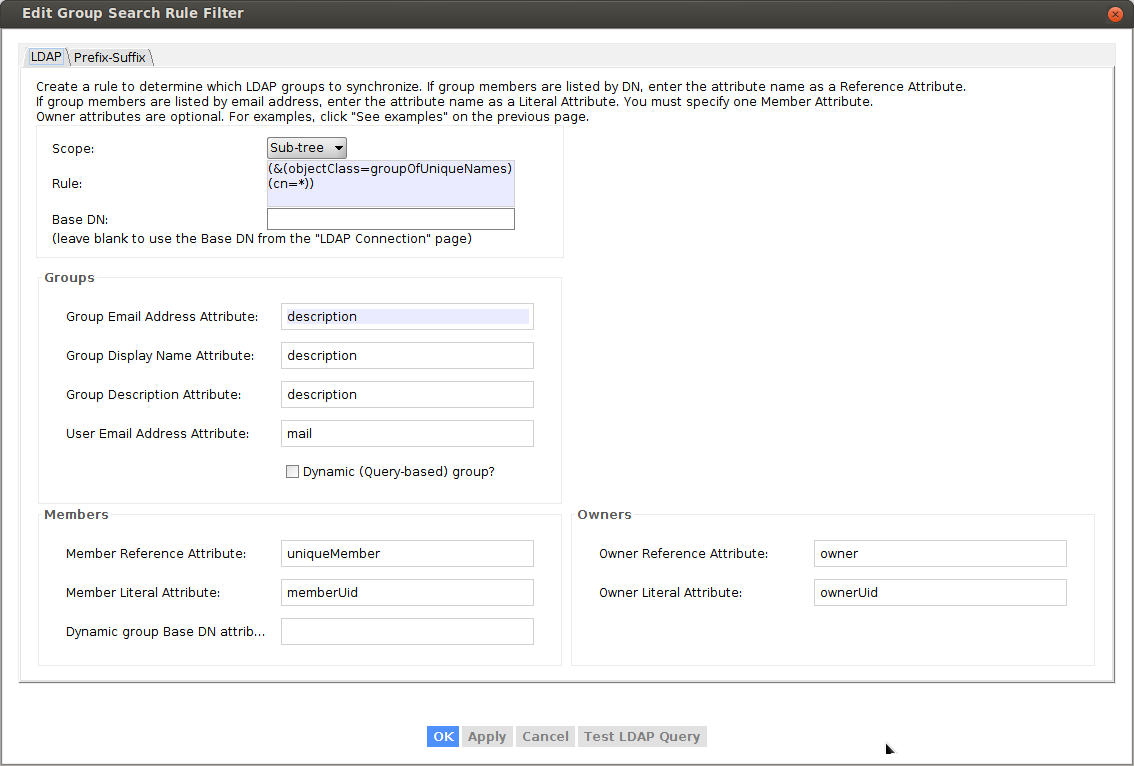

Groups Configuration

I did not want to override the default openldap schema so I ended up using the description attribute of objectclass: groupofuniquenames as the attribute the utility will use to infer the Group Email Address:

- Group Email Address Attribute: description

Meaning the LDAP’s description field for a groupofuniquenames denotes the email address to provision in G-suites.

You can search for the groups by looking in the subtree for:

(&(objectClass=groupOfUniqueNames)(cn=*))

For example:

dn: cn=engineering, ou=groups, dc=example,dc=com

cn: engineering

objectclass: groupofuniquenames

description: engineering@example.com

uniqueMember: cn=user1,ou=people, dc=example,dc=com

uniqueMember: cn=user2,ou=people, dc=example,dc=com

To verify, select “Test Query” button:

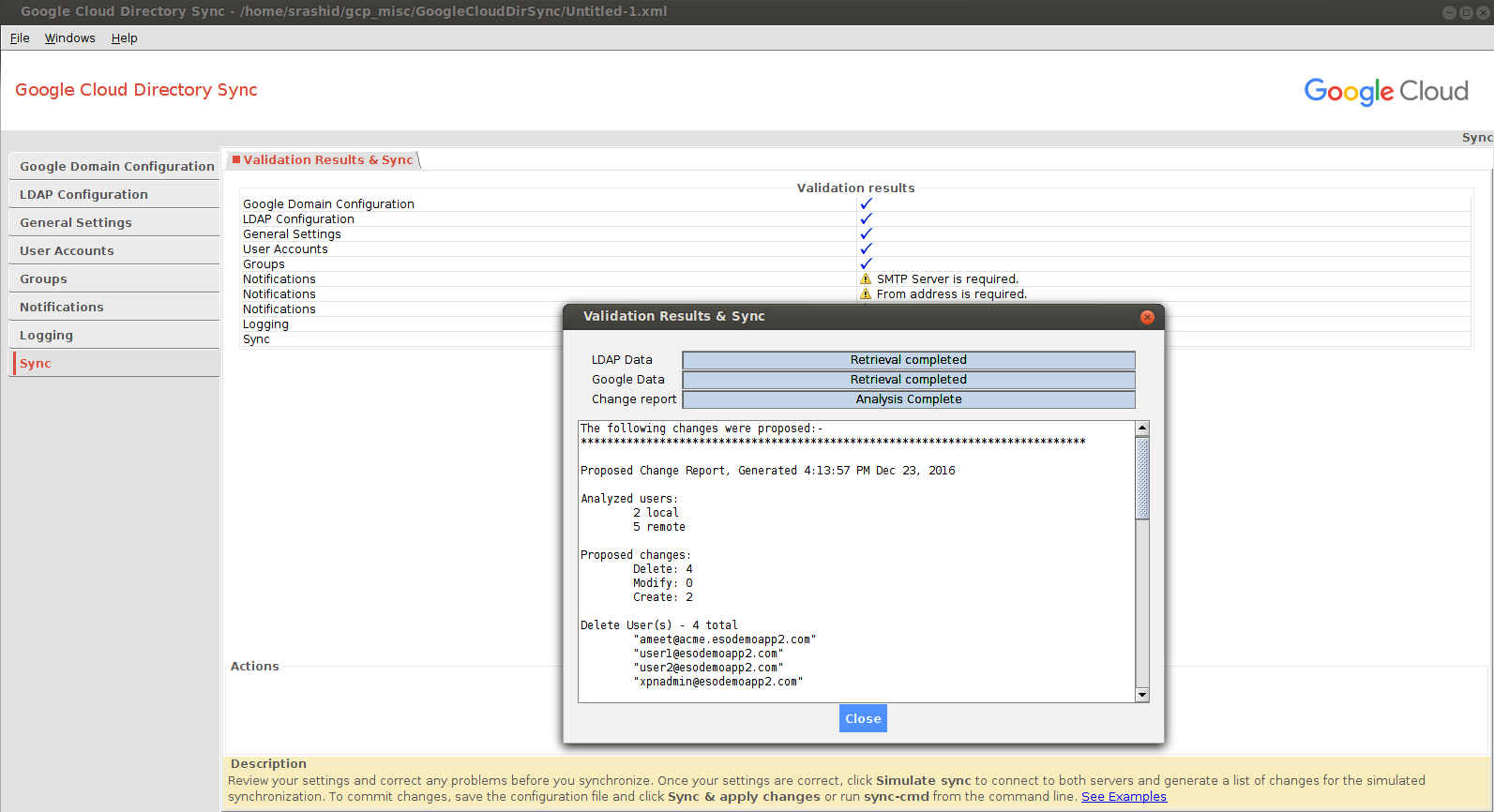

Execute Dry-Run Sync

Now that you are all setup, click the ‘Simulate sync’ button to see what would happen.

REMEMBER TO SELECT “SIMULATE SYNC”

If had existing users already in my apps domain and I tried to import new ones, the reconciliation favored the local LDAP (meaning it would add local ldap and delete existing accounts~)

Execute Sync

Only execute a full sync if you are absolutely sure this is what you want to do!!

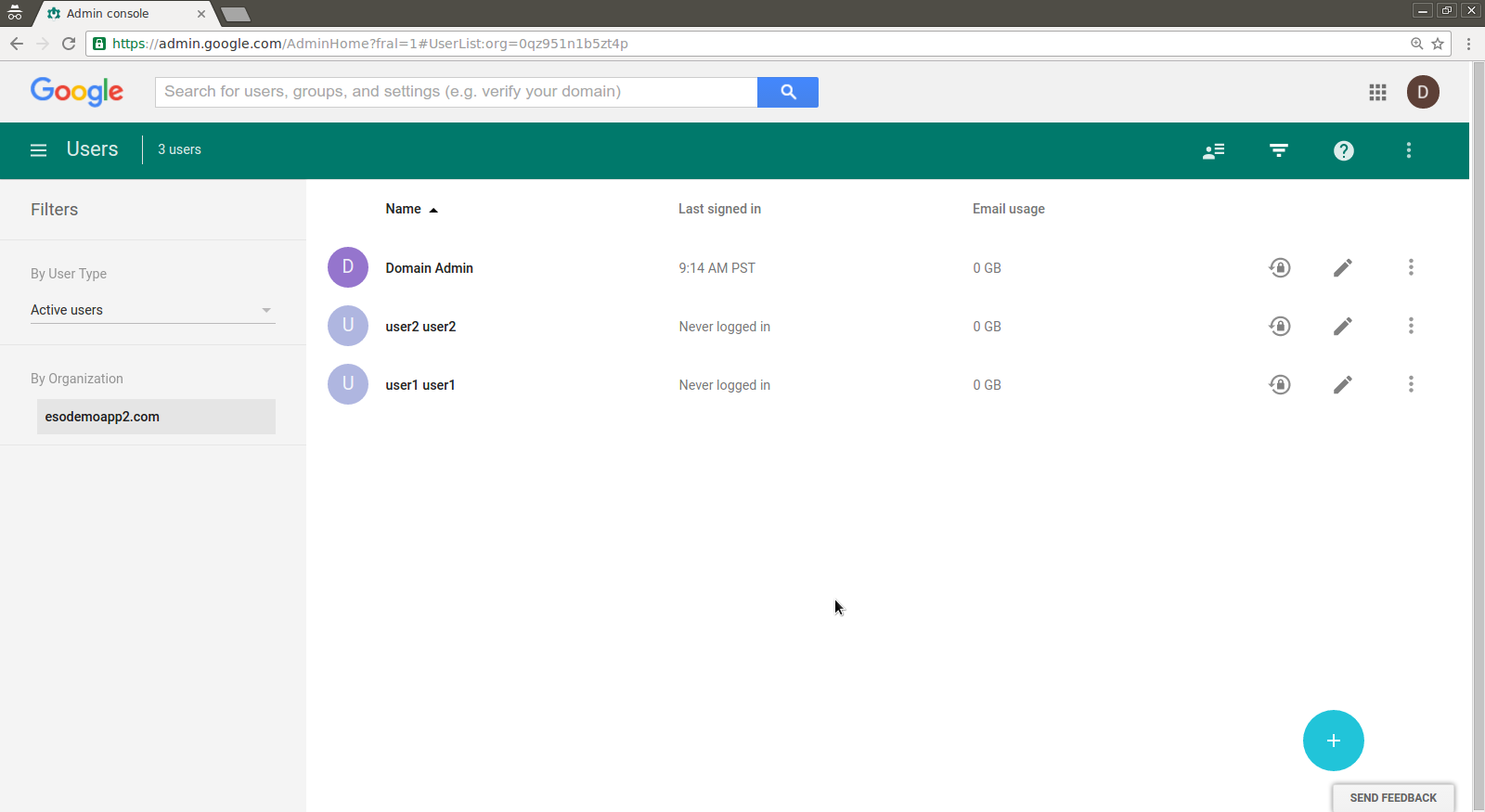

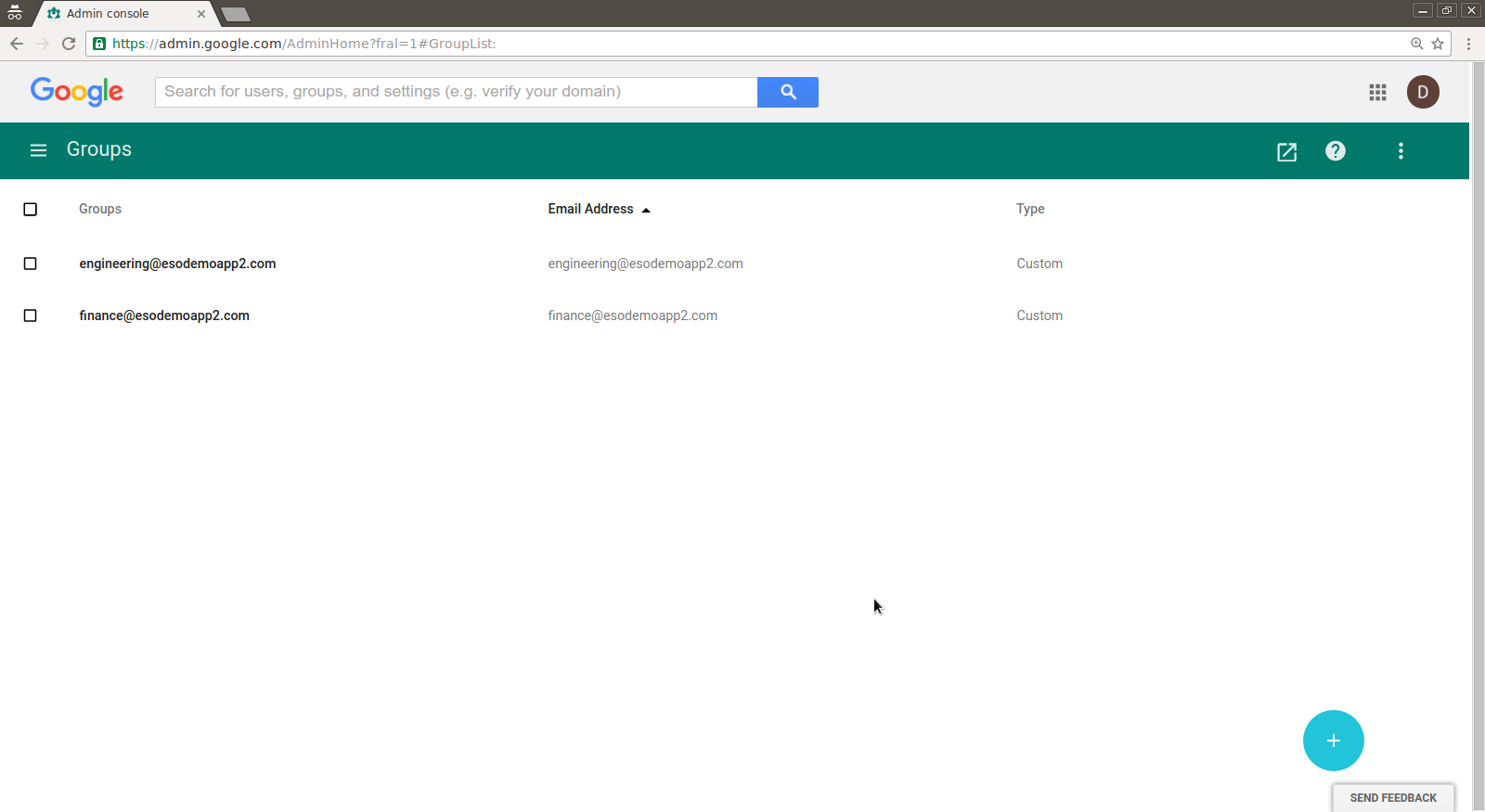

If you are confident on the sync setup, you can initiate the full synchronization. Once the users and groups are committed, you can see them in the Google Apps domain console.

Note, the setup does not sync or overwrite the domain admin users.

You can also backup/export your existing users list first to a .csv file prior to running the full sync.

The following changes were applied on the Google domain:-

*****************************************************************************Change Status Report, Generated 10:09:17 AM Dec 28, 2016

Successful user changes:

Deleted: 0

Modified: 0

Created: 2Failures:

Delete: 0

Modify: 0

Create: 0Created 2 new users

User: "user1@example.com"

Local key "dXNlcjE"

Given name "user1"

Family name "user1"

Set SHA-1 password hashUser: "user2@example.com"

Local key "dXNlcjI"

Given name "user2"

Family name "user2"

Set SHA-1 password hash

Successful group changes:

Deleted: 0

Modified: 2

Created: 2Failures:

Delete: 0

Modify: 0

Create: 0Successfully modified 2 groups

Group: "finance@example.com"

Added user user1@example.comGroup: "engineering@example.com"

Added user user1@example.com

Added user user2@example.com

Created 2 new groups

Group: "engineering@example.com"

Group: "finance@example.com"The following changes were proposed:-

*****************************************************************************Proposed Change Report, Generated 10:09:16 AM Dec 28, 2016Analyzed users:

2 local

1 remoteProposed changes:

Delete: 0

Modify: 0

Create: 2Create - 2 total

New user 1: "user1@example.com"

Non-address primary key "dXNlcjE"

Given name "user1"

Family name "user1"

SHA1 password

0 aliasesNew user 2: "user2@example.com"

Non-address primary key "dXNlcjI"

Given name "user2"

Family name "user2"

SHA1 password

0 aliasesAnalyzed groups:

2 local

0 remoteProposed changes:

Delete: 0

Modify: 2

Create: 2

Create Group(s) - 2 total

"engineering@example.com"

"finance@example.com"

Modify (all proposed changes) - 2 total groups affected

Modify group 1: "engineering@example.com"

Add address "user1@example.com"

Add address "user2@example.com"Modify group 2: "finance@example.com"

Add address "user1@example.com"

Directory Sync via Admin API

You can also script the provisioning and management of users and groups via the G-suites APIs such as Directory API

#!/usr/bin/pythonfrom apiclient.discovery import build

import httplib2

from oauth2client.service_account import ServiceAccountCredentials

from oauth2client.client import GoogleCredentials

import logging

import json

import sys

from apiclient import discovery

import oauth2client

from oauth2client import client

from oauth2client import toolsscope = 'https://www.googleapis.com/auth/admin.directory.user'

credentials = ServiceAccountCredentials.from_p12_keyfile('adminapi@fabled-ray-104117.iam.gserviceaccount.com',

'project1-5fc7d442817b.p12',

scopes=scope)

credentials = credentials.create_delegated('admin@example.com')

http = httplib2.Http()

http = credentials.authorize(http)

service = discovery.build('admin', 'directory_v1', http=http)

results = service.users().list(customer='C023zw2x7', domain='example.com').execute()

users = results.get('users', [])

print json.dumps(users, sort_keys=True, indent=4)

for u in users:

print json.dumps(u['primaryEmail'], sort_keys=True, indent=4)

Testing Laravel Password Resets

Testing is an important yet often overlooked aspect of building successful Laravel applications. This article will provide an introduction to testing applications written using the Laravel Framework.

For our purposes we’ll be writing feature tests that make HTTP requests to our application and then make assertions about the responses and the state of the application’s database after the request is complete. We will make minimal changes to the authentication scaffolding provided by Laravel and focus on testing the Password Reset feature.

Getting Started

Assuming you are familiar with setting up a new Laravel project, use your terminal and the Laravel installer to create a new project.

If you aren’t familiar with setting up a development environment for a new Laravel application I encourage you to check out the documentation on installation and the Vagrant box Homestead.

Create a new Laravel application in the directory password-reset-testing.

$ laravel new password-reset-testing

Once composer has finished installing everything, change your working directory to that of the new project.

$ cd password-reset-testing/

Next use Artisan to generate the authentication scaffolding for our application.

$ php artisan make:auth

Again using Artisan, run the database migrations to create the users and password_resets tables.

$ php artisan migrate

Naming Each Route

As a best practice, each of our application’s routes should have a name. By using route names and the route helper function instead of hard-coding routes, the URI of a route can be easily changed in the future.

Open up routes/web.php and change the contents to match below.

<?php

// Welcome Route

Route::get('/', function () {

return view('welcome');

})->name('welcome');

// Authentication Routes

Route::get('login', 'Auth\LoginController@showLoginForm')

->name('login');

Route::post('login', 'Auth\LoginController@login')

->name('login.submit');

Route::post('logout', 'Auth\LoginController@logout')

->name('logout');

// Registration Routes

Route::get('register',

'Auth\RegisterController@showRegistrationForm')

->name('register');

Route::post('register',

'Auth\RegisterController@register')

->name('register.submit');

// Password Reset Routes

Route::get('password/reset',

'Auth\ForgotPasswordController@showLinkRequestForm')

->name('password.request');

Route::post('password/email',

'Auth\ForgotPasswordController@sendResetLinkEmail')

->name('password.email');

Route::get('password/reset/{token}',

'Auth\ResetPasswordController@showResetForm')

->name('password.reset');

Route::post('password/reset',

'Auth\ResetPasswordController@reset')

->name('password.reset.submit');

// Home Route

Route::get('/home', 'HomeController@index')

->name('home');

Note that we didn’t change any of the routes provided by the original Auth::routes() statement, we simply rewrote them to include names for every route.

Editing the Base Test Case

Before we write our tests, let’s quickly edit the base test case. Open up the file at tests/TestCase.php and edit the contents to match below.

<?php

namespace Tests;

use Illuminate\Foundation\Testing\DatabaseTransactions;

use Notification;

use Illuminate\Foundation\Testing\TestCase as BaseTestCase;

abstract class TestCase extends BaseTestCase

{

use CreatesApplication;

use DatabaseTransactions;

/**

* Set up the test case.

*/

protected function setUp()

{

parent::setUp();

Notification::fake();

}

}

First we import the Illuminate\Foundation\Testing\DatabaseTransactions trait and the Notification facade.

The statement use DatabaseTransactions at the top of the class tells Laravel to create a database transaction before each test and roll back the transaction after each test. This will keep our tests from affecting the state of our database; the database will be in the same starting state for each test.

We override the setUp method which is called before running each test. In this method we first call the parent setUp method then call fake on the Notification facade. This will fake all notifications sent out during any of our tests. Within each test we can then use another method on the Notification facade to assert a notification would have been sent to the correct destination.

Creating the Test Class

Use artisan to generate a new feature test called PasswordResetTest.

$ php artisan make:test PasswordResetTest

Open the new file at tests/Feature/PasswordResetTest.php and edit the contents to match below.

<?php

namespace Tests\Feature;

use App\User;

use Hash;

use Illuminate\Auth\Notifications\ResetPassword;

use Illuminate\Foundation\Testing\WithFaker;

use Notification;

use Password;

use Tests\TestCase;

class PasswordResetTest extends TestCase

{

use WithFaker;

const ROUTE_PASSWORD_EMAIL = 'password.email';

const ROUTE_PASSWORD_REQUEST = 'password.request';

const ROUTE_PASSWORD_RESET = 'password.reset';

const ROUTE_PASSWORD_RESET_SUBMIT = 'password.reset.submit';

const USER_ORIGINAL_PASSWORD = 'secret';

}

Here we’ve added import statements for the model App\User, the facades Hash, Notification, and Password, and the notification Illuminate\Auth\Notifications\ResetPassword. We’ve also added an import statement for the trait Illuminate\Foundation\Testing\WithFaker which conveniently instantiates a Faker factory for us for use within our tests. We simply specify our class is using the WithFaker trait and each test case will have an instance of a Faker factory at $this->faker.

Within our class we replaced the example test case with a statement specifying we’re using the WithFaker trait, constants for each route name we’ll be using, and a constant for the password test users will have.

Writing Test Cases

We will write tests for the following cases:

- Showing the password reset request page

- Submitting the password reset request page with an invalid email address

- Submitting the password reset request page with an email address not in use

- Submitting the password reset request page with a valid email address in use

- Showing the reset password page

- Submitting the reset password page with an invalid email address

- Submitting the reset password page with an email address not in use

- Submitting the reset password page with a valid email address in use and a password that does not match the password confirmation

- Submitting the reset password page with a valid email address in use and a password that isn’t long enough

- Submitting the reset password page with a valid email address in use and a valid password matching the password confirmation

After each new test, feel free to run PHPUnit using your terminal.

$ ./vendor/bin/phpunit

Testing Showing the Password Reset Request Page

Now it’s time to write our first test! Edit the PasswordResetTest class by adding the method below. By convention each test case method starts with test which is then recognized by PHPUnit.

/**

* Testing showing the password reset request page.

*/

public function testShowPasswordResetRequestPage()

{

$this

->get(route(self::ROUTE_PASSWORD_REQUEST))

->assertSuccessful()

->assertSee('Reset Password')

->assertSee('E-Mail Address')

->assertSee('Send Password Reset Link');

}

In this test case we use the method get to make a GET request to the specified URI. We generate the URI using the route helper method and the name of our route, which is stored in a constant. The assertSuccessful method asserts the response has a 200 level status code. Next we use the assertSee method to check for the presence of the text Reset Password, E-Mail Address, and Send Password Reset Link.

Testing Submitting the Password Reset Request Page

Our next few tests will be testing submitting the password reset request page with various inputs.

Add the next test shown below which tests submitting a password reset request with an invalid email address.

/**

* Testing submitting the password reset request with an invalid

* email address.

*/

public function testSubmitPasswordResetRequestInvalidEmail()

{

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_REQUEST))

->post(route(self::ROUTE_PASSWORD_EMAIL), [

'email' => str_random(),

])

->assertSuccessful()

->assertSee(__('validation.email', [

'attribute' => 'email',

]));

}

When a request fails validation, Laravel will return a redirect to the location the request came from with validation error messages flashed to the session. To make assertions on the response the user will see, therefore, we need to follow redirects with the followingRedirects method. We also specify a location we’re making the request from using the from method.

Next we use the post method to issue a POST request to the password.email route (again using the route helper and a previously defined constant) with data specifying the email key as a random string (using the str_random helper method).

We assert the response is successful and check for the presence of a validation message. The __ helper method is used to format the validation message using localization files. validation.email specifies the file resources/lang/{locale}/validation.php and the email array key, where {locale} is the application’s configured locale. The :attribute parameter in the string The :attribute must be a valid email address. will be replaced by the string email as specified by the associative array passed as the second argument to the __ method.

Next we’ll be testing submitting the password reset request page with a valid email address that is not in use by any user of the application.

Add the test shown below.

/**

* Testing submitting the password reset request with an email

* address not in the database.

*/

public function testSubmitPasswordResetRequestEmailNotFound()

{

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_REQUEST))

->post(route(self::ROUTE_PASSWORD_EMAIL), [

'email' => $this->faker->unique()->safeEmail,

])

->assertSuccessful()

->assertSee(e(__('passwords.user')));

}

Again we follow redirects and set the location where our request should originate from, but this time we use Faker to generate an email address that is not in use by anyone in the world (as the domains are example.com, example.net, and example.org). We use the unique method to ensure the email address returned has not been previously returned by Faker.

We assert the response is successful and check for the presence of the validation error message specified by the user key in the associative array in the file resources/lang/{locale}/passwords.php. This time the validation message contains a reserved HTML character, ', so we must use the e helper method to replace the character with it’s corresponding character entity.

Finally it’s time to test successfully submitting the password reset request page with a valid email address present in our application’s database.

Add the test shown below.

/**

* Testing submitting a password reset request.

*/

public function testSubmitPasswordResetRequest()

{

$user = factory(User::class)->create();

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_REQUEST))

->post(route(self::ROUTE_PASSWORD_EMAIL), [

'email' => $user->email,

])

->assertSuccessful()

->assertSee(__('passwords.sent'));

Notification::assertSentTo($user, ResetPassword::class);

}

In this test we use the factory helper method to create a new user in our database. Then we follow redirects for the response to our POST request to the password.email route. Our request specifies the created user’s email address in the email key of the payload. We assert the response is successful and check for the presence of the string We have e-mailed your password reset link!, specified with the argument passwords.sent passed to the __ helper method.

Using the Notification facade’s method assertSentTo we assert the ResetPassword notification was sent to the $user. We can pass the model stored in the variable $user directly into the assertSentTo method because our User model, by default, uses the Illuminate\Notifications\Notifiable trait. When routing emails for any model using the Notifiable trait, the email property on the model will be used by default.

Testing Showing the Password Reset Page

Next, to test showing the password reset page, add the test shown below.

/**

* Testing showing the reset password page.

*/

public function testShowPasswordResetPage()

{

$user = factory(User::class)->create();

$token = Password::broker()->createToken($user);

$this

->get(route(self::ROUTE_PASSWORD_RESET, [

'token' => $token,

]))

->assertSuccessful()

->assertSee('Reset Password')

->assertSee('E-Mail Address')

->assertSee('Password')

->assertSee('Confirm Password');

}

We again create a user using the factory helper method. Next we create a valid password reset token using the Password facade.

The value of $token is used to replace the token parameter in the password.reset route. We send a GET request to this route, assert the response is successful, and check for the presence of the text for page elements.

Testing Submitting the Password Rest Page

Next we’ll test submitting the password reset page, starting with using an invalid email address.

Continue our testing by adding the test shown below.

/**

* Testing submitting the password reset page with an invalid

* email address.

*/

public function testSubmitPasswordResetInvalidEmail()

{

$user = factory(User::class)->create([

'password' => bcrypt(self::USER_ORIGINAL_PASSWORD),

]);

$token = Password::broker()->createToken($user);

$password = str_random();

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_RESET, [

'token' => $token,

]))

->post(route(self::ROUTE_PASSWORD_RESET_SUBMIT), [

'token' => $token,

'email' => str_random(),

'password' => $password,

'password_confirmation' => $password,

])

->assertSuccessful()

->assertSee(__('validation.email', [

'attribute' => 'email',

]));

$user->refresh();

$this->assertFalse(Hash::check($password, $user->password));

$this->assertTrue(Hash::check(self::USER_ORIGINAL_PASSWORD,

$user->password));

}

In this test we’re again using the factory helper method to create at test user but this time we explicitly set the user’s password. To do this we use the bcrypt helper method to hash the value of our constant.

We create another password reset token and generate a random string to use as the new password for our request’s payload. Again following redirects we POST to the password.reset.submit route with a request originating from the password.reset route. A random string is used for the email address in the request payload.

After asserting the response was successful and checking for the validation.email validation message we refresh the user model and use the check method on the Hash facade to assert the user’s password has not changed.

Next we’ll test submitting the password reset page with an email address not in use by our application’s database.

Add the test shown below.

/**

* Testing submitting the password reset page with an email

* address not in the database.

*/

public function testSubmitPasswordResetEmailNotFound()

{

$user = factory(User::class)->create([

'password' => bcrypt(self::USER_ORIGINAL_PASSWORD),

]);

$token = Password::broker()->createToken($user);

$password = str_random();

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_RESET, [

'token' => $token,

]))

->post(route(self::ROUTE_PASSWORD_RESET_SUBMIT), [

'token' => $token,

'email' => $this->faker->unique()->safeEmail,

'password' => $password,

'password_confirmation' => $password,

])

->assertSuccessful()

->assertSee(e(__('passwords.user')));

$user->refresh();

$this->assertFalse(Hash::check($password, $user->password));

$this->assertTrue(Hash::check(self::USER_ORIGINAL_PASSWORD,

$user->password));

}

Nothing new on this test. We create a user, password reset token, and new password. Then the follow redirects, POST to the password.reset.submit route from the password.reset route using the token, a random and unique safe email, and the new password. We assert the response is successful, check for the presence of the passwords.user translated string (after swapping any html character entities in the string), refresh the user, and assert the user’s password hasn’t changed.

The next test will be testing submitting the password reset page with a password that doesn’t match the password confirmation.

Add the test shown below.

/**

* Testing submitting the password reset page with a password

* that doesn't match the password confirmation.

*/

public function testSubmitPasswordResetPasswordMismatch()

{

$user = factory(User::class)->create([

'password' => bcrypt(self::USER_ORIGINAL_PASSWORD),

]);

$token = Password::broker()->createToken($user);

$password = str_random();

$password_confirmation = str_random();

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_RESET, [

'token' => $token,

]))

->post(route(self::ROUTE_PASSWORD_RESET_SUBMIT), [

'token' => $token,

'email' => $user->email,

'password' => $password,

'password_confirmation' => $password_confirmation,

])

->assertSuccessful()

->assertSee(__('validation.confirmed', [

'attribute' => 'password',

]));

$user->refresh();

$this->assertFalse(Hash::check($password, $user->password));

$this->assertTrue(Hash::check(self::USER_ORIGINAL_PASSWORD,

$user->password));

}

Again nothing new on this test except we’re checking for a different validation message.

Our last invalid submission case to test for submitting the password reset page is using a new password that’s too short.

Add the test shown below.

/**

* Testing submitting the password reset page with a password

* that is not long enough.

*/

public function testSubmitPasswordResetPasswordTooShort()

{

$user = factory(User::class)->create([

'password' => bcrypt(self::USER_ORIGINAL_PASSWORD),

]);

$token = Password::broker()->createToken($user);

$password = str_random(5);

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_RESET, [

'token' => $token,

]))

->post(route(self::ROUTE_PASSWORD_RESET_SUBMIT), [

'token' => $token,

'email' => $user->email,

'password' => $password,

'password_confirmation' => $password,

])

->assertSuccessful()

->assertSee(__('validation.min.string', [

'attribute' => 'password',

'min' => 6,

]));

$user->refresh();

$this->assertFalse(Hash::check($password, $user->password));

$this->assertTrue(Hash::check(self::USER_ORIGINAL_PASSWORD,

$user->password));

}

This time we pass an argument 5 to the str_random helper function to specify the length of the random returned string (as opposed to the default length of 16). Another difference in this test is we’re checking for the presence of a validation message, validation.min.string, with two parameters, attribute and min.

Notice how we can use dot notation to specify a translation string in a nested array. To learn more about these validation messages and translation strings, check out the file at resources/lang/{locale}/validation.php.

Finally, it’s time to test the happy path: submitting the password reset page with a valid email address belonging to a user with a valid password reset token and a password matching the confirmation password (that isn’t too short).

Add the final test shown below.

/**

* Testing submitting the password reset page.

*/

public function testSubmitPasswordReset()

{

$user = factory(User::class)->create([

'password' => bcrypt(self::USER_ORIGINAL_PASSWORD),

]);

$token = Password::broker()->createToken($user);

$password = str_random();

$this

->followingRedirects()

->from(route(self::ROUTE_PASSWORD_RESET, [

'token' => $token,

]))

->post(route(self::ROUTE_PASSWORD_RESET_SUBMIT), [

'token' => $token,

'email' => $user->email,

'password' => $password,

'password_confirmation' => $password,

])

->assertSuccessful()

->assertSee(__('passwords.reset'));

$user->refresh();

$this->assertFalse(Hash::check(self::USER_ORIGINAL_PASSWORD,

$user->password));

$this->assertTrue(Hash::check($password, $user->password));

}

In this test we use the Hash facade to assert the user’s password has changed to the given password, thus successfully completing the password reset.

Conclusion

This concludes our testing for Laravel’s password resets. In ten short tests we were able to do things like create test users and valid password reset tokens, make HTTP requests to our application, assert the response contains desired content, and check if the user’s password has changed as a result of the request.

Laravel has provided ample testing capabilities and I strongly recommend reading the documentation for a deeper look at the possibilities.

You can view the source code for this project on GitHub.

Getting Started with Geospatial Data in Laravel

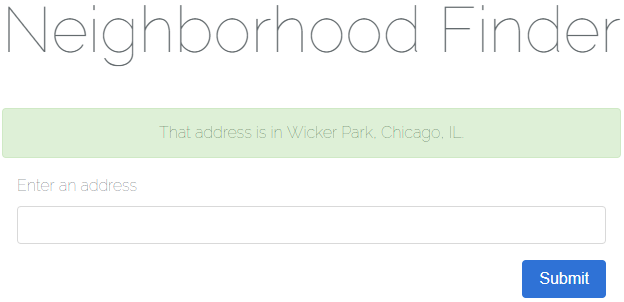

Today we’ll be learning about working with geospatial data, or data relating to geographical locations, in Laravel 5.6. As an exercise for working with this kind of data we’ll be building a simple USA Neighborhood Finder application.

There are three main learning objectives for this article:

- How to use Google’s Geocoding API to geocode an address into corresponding coordinates consisting of a longitude and latitude.

- How to import geospatial data in Well-Known Text (WKT) format into a spatial column in MySQL.

- How to determine if a point (in this case a longitude and latitude) is contained by a geometry (in this case a neighborhood boundary).

Overview

The flow of our application is as follows:

- A user enters an address and clicks “Submit”.

- The name of the neighborhood which contains the address is displayed to the user (or an error message stating no location or neighborhood could be found).

For the purposes of this article we won’t be tracking down the neighborhood boundaries of every neighborhood in the USA; instead we’ll be using three example data sets but set things up so more data sources can easily be added in the future.

You can take a look at the data sources using these links:

- Chicago, IL neighborhood boundaries

- Baltimore, MD neighborhood boundaries

- East Baton Rouge Parish, Baton Rouge, LA neighborhood boundaries

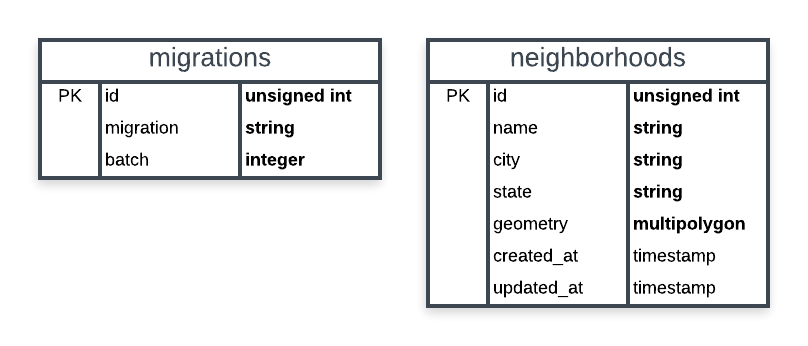

A basic schema of our application’s database is shown below. We only need two tables: Laravel’s table for migrations and a table for storing neighborhoods. Our spatial column will be named geometry and be of the multipolygon type. Think of a multipolygon as a collection of polygons. We’re using a multipolygon because a neighborhood may have more than one polygon to define its boundary. If a neighborhood uses a polygon to define its boundary we can always create multipolygon containing a single polygon (which we’ll be doing later).

In addition to the Laravel Framework, we’ll be using two more packages to build our application:

- toin0u/geocoder-laravel for using Google’s Geocoding API

- grimzy/laravel-mysql-spatial for parsing WKT geometries and determining if a multipolygon contains a point.

We will also be using Bootstrap 4 for basic styling.

Creating Our Application

For this article it’s assumed you’re comfortable using a development environment for Laravel (such as Homestead).

Generating the Project

First, let’s create a new Laravel project using the terminal.

$ laravel new neighborhood-finder

Wait for the installation to finish and change directories to the root of the project.

$ cd neighborhood-finder/

Next let’s clean up some of the things Laravel comes with that we won’t be using for our application. This includes authentication controllers, the User model/migration/factory, and the password_resets table.

- delete the

app/Http/Controllers/Authdirectory - delete

app/User.php - delete

database/factories/UserFactory.php - delete

database/migrations/*_create_users_table.php - delete

database/migrations/*_create_password_resets_table.php

Now we’re ready to create the model and migration for a Neighborhood. Note how we’re creating the model in the App\Models namespace.

$ php artisan make:model Models\\Neighborhood --migration

For our application each Neighborhood will have an (auto-incrementing) id, a name, a city, a state(abbreviation), and a geometry representing the neighborhood’s boundary as a multipolygon.

Open up the generated database/migrations/*_create_neighborhoods_table.php migration and edit the up method as shown below.

/**

* Run the migrations.

*

* @return void

*/

public function up()

{

Schema::create('neighborhoods', function (Blueprint $table) {

$table->increments('id');

$table->string('name');

$table->string('city');

$table->string('state', 2);

$table->multiPolygon('geometry');

$table->timestamps();

});

}

Installing the First Package

Now let’s install our first package using composer: grimzy/laravel-mysql-spatial. This package will allow us to easily work with spatial data types.

$ composer require "grimzy/laravel-mysql-spatial"

Let composer install the package dependencies and regenerate autoload files.

The Neighborhood Model

Our Neighborhood model will be using the SpatialTrait found in the grimzy/laravel-mysql-spatial package. The package looks for any attributes defined in the $spatialFields array on a model using this trait.

Edit app/Models/Neighborhood.php to match below. Notice how a spatial field is still eligible to be fillable through mass assignment.

<?php

namespace App\Models;

use Grimzy\LaravelMysqlSpatial\Eloquent\SpatialTrait;

use Illuminate\Database\Eloquent\Model;

class Neighborhood extends Model

{

use SpatialTrait;

/**

* The attributes that are mass-assignable.

*

* @var array

*/

protected $fillable = [

'name',

'geometry',

];

/**

* The attributes that are spatial fields.

*

* @var array

*/

protected $spatialFields = [

'geometry'

];

}

Gathering Test Data

Many cities have data portals open to the public. To seed our database with neighborhoods including geospatial data for boundaries, our application will use Comma-separated values (CSV) files exported from three of these data portals.

Create a directory at database/seeds/flat-files for a place to store the flat files.

We want to ensure none of these flat files are checked into source control, so create a .gitignore file at database/seeds/flat-files/.gitignore containing the following:

*

!.gitignore

The * entry is a wildcard telling git to ignore all files in the directory. The !.gitignore entry excludes the .gitignore file from the wildcard so it is still checked into source control.

Download the following CSV files to each location specified below.

- this CSV to

database/seeds/flat-files/chicago-illinois.csv - this CSV to

database/seeds/flat-files/baltimore-maryland.csv - this CSV to

database/seeds/flat-files/east-baton-rouge-parish-louisiana.csv

Let’s take a quick peek at what this data looks like. Open up database/seeds/flat-files/chicago-illinois.csv and notice the file contains a header row specifying the columns. The two columns we want are PRI_NEIGH (primary neighborhood) and the_geom (the geometry data).

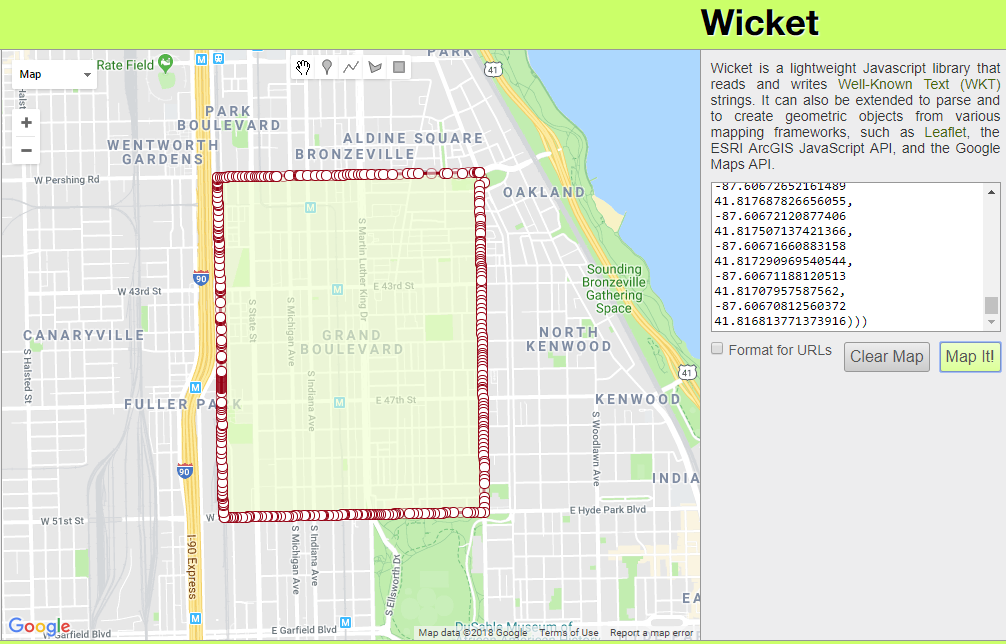

From the first row of data in the file, copy the MULTIPOLYGON(((...))) part. This is the WKT representation of the neighborhood’s boundary.

To visualize WKT data one of my favorite tools is Wicket by Arthur Endsley. Open up Wicket, paste the WKT data from your clipboard into the text box, and click “Map It!”. You’ll see the mapped multipolygon for Chicago’s neighborhood Grand Boulevard.

Creating Seeders

Now that we have our flat files and an understanding of what the data looks like let’s create some seeders.

For this exercise we’ll keep things simple with one seeder per file, each of which will extend a base class. The base class will hold logic for reading a CSV and creating a Neighborhood record. It will also contain an abstract method for transforming a geometry record into a Multipolygon. Each seeder extending the base class will implement this abstract method with logic specific to the file being processed. These seeders will also contain the run() method to be called by Laravel.

While this pattern works well for our purposes as we only have a few flat files to process, for a larger application with possibly hundreds of files I’d suggest a variation of this pattern and not defining one seeder per file.

First, let’s create our BaseNeighborhoodSeeder using artisan.

$ php artisan make:seeder BaseNeighborhoodSeeder

Update the created file at database/seeds/BaseNeighborhoodSeeder.php to match below.

<?php

use App\Models\Neighborhood;

use Grimzy\LaravelMysqlSpatial\Types\MultiPolygon;

use Illuminate\Database\Seeder;

abstract class BaseNeighborhoodSeeder extends Seeder

{

/**

* Mode for opening a file as read-only.

*/

const FILE_MODE_READ = 'r';

/**

* Parses the given geometry value into a Multipolygon.

*

* @param mixed $geometry the geometry to parse

* @return \Grimzy\LaravelMysqlSpatial\Types\MultiPolygon

*/

protected abstract function parseGeometryToMultiPolygon($geometry): MultiPolygon;

/**

* Reads all records in a flat file, parses the geometry into a multipolygon,

* and saves a Neighborhood in the database for each record.

*

* @param string $file_path path to the file to read data from

* @param int $name_index the index of the column containing the neighborhood name

* @param int $geometry_index the index of the column containing the neighborhood geometry

* @param string $city the name of the neighborhoods' city

* @param string $state the name of the neighborhoods' state

* @param bool $skip_first_row if the first row of the file should be skipped (if there's a header row)

* @param bool $use_title_case if the neighborhood names should be converted to Title Case

* @throws \Throwable

*/

protected function seedFromFlatFile(string $file_path,

int $name_index,

int $geometry_index,

string $city,

string $state,

bool $skip_first_row,

bool $use_title_case) {

// throw an exception unless a file exists at the given location

throw_unless(file_exists($file_path), new Exception("No file found at path '$file_path'"));

try {

// open the specified file at the given location

$file = fopen($file_path, self::FILE_MODE_READ);

// if the first row should be skipped, read the first row of the file

if ($skip_first_row) {

fgetcsv($file);

}

// while there's a row to be read in the file, read the next row

while ($row = fgetcsv($file)) {

// get the neighborhood name from the specified index

$name = $row[$name_index];

// if the name should be converted to Title Case, convert it

if ($use_title_case) {

$name = title_case($name);

}

// parse the geometry at the specified index into a multipolygon

$multipolygon = $this->parseGeometryToMultiPolygon($row[$geometry_index]);

// make the new neighborhood model by filling the name, city, state, and geometry

$neighborhood = new Neighborhood([

'name' => $name,

'city' => $city,

'state' => $state,

'geometry' => $multipolygon,

]);

// throw an exception unless the neighborhood could be saved

throw_unless($neighborhood->save(), new Exception("Failed to save neighborhood '$name'"));

}

} finally {

// if the file has been opened, close it

if (! empty($file)) {

fclose($file);

}

}

}

}

Here we’re defining an abstract class which cannot be instantiated; this class must be extended to be used. At the top of the class we have a constant for the file mode we’ll be using to open CSV files.

We define the abstract method parseGeometryToMulipolygon($geometry) and declare it returns a Multipolygon. This method must be implemented by any class extending BaseNeighborhoodSeeder and will contain the logic necessary for converting the geometry data in each CSV record to a Multipolygon. For our purposes this will always be parsing WKT but it could easily be parsing another format such as GeoJSON.

The seedFromFlatFile method contains parameters for the path to the file to read data from, the index of the neighborhood name column, the index of the neighborhood boundary geometry column, the name of the city for the neighborhoods, the name of the state for the neighborhoods, whether or not to skip the first row of the file (in case there is a header row), and whether or not the neighborhood name should be converted to Title Case before being saved.

In this method we first check if a file exists at $file_path using PHP’s file_exists function. If a file does not exist at the specified path we throw an exception.

Next, inside a try block, we open the file for reading using fopen with the file mode 'r'. If the $skip_first_row flag is true, we read the first row of the file using PHP’s function fgetcsv. Looping through each row, while there’s still a row left to read in the file, we use fgetcsv to parse the CSV row into an array of data.

Using the given $name_index we get the neighborhood name from the array and if $use_title_case is true we use Laravel’s helper method title_case to convert the string to Title Case. The neighborhood’s geometry is parsed into a MultiPolygon by passing the data of the geometry column into the parseGeometryToMultiPolygon method, which will be implemented by child classes.

Finally we create the new neighborhood record by passing an array of attributes to the Neighborhood model’s constructor. If the model could not be saved, an exception is thrown.

In the finally block we check if the $file variable has a value and if it does, we use fclose to close the file. Putting this logic inside the finally block ensures we close the file even if an exception is thrown.

With our base seeder class in place, we’re ready to create a seeder for each flat file. Start by creating ChicagoIllinoisNeighborhoodSeeder using artisan.

$ php artisan make:seeder ChicagoIllinoisNeighborhoodSeeder

Update the file database/seeds/ChicagoIllinoisDatabaseSeeder.php with the content below.

<?php

use Grimzy\LaravelMysqlSpatial\Types\MultiPolygon;

class ChicagoIllinoisNeighborhoodSeeder extends BaseNeighborhoodSeeder

{

/**

* Index of the name column.

*/

const COLUMN_INDEX_NAME = 0;

/**

* Index of the geometry column.

*/

const COLUMN_INDEX_GEOMETRY = 1;

/**

* Name of the neighborhoods' city.

*/

const CITY = 'Chicago';

/**

* Name of the neighborhoods' state.

*/

const STATE = 'IL';

/**

* Path of the seed file relative to the `database` directory.

*/

const DATABASE_FILE_PATH = 'seeds/flat-files/chicago-illinois.csv';

/**

* If the file has a header row.

*/

const HAS_HEADER_ROW = true;

/**

* If the neighborhood names should be converted to Title Case.

*/

const USE_TITLE_CASE = false;

/**

* Run the database seeds.

*

* @throws \Throwable

*/

public function run()

{

// resolve the path of the seed file

$file_path = database_path(self::DATABASE_FILE_PATH);

// seed the neighborhoods from the flat file

$this->seedFromFlatFile($file_path,

self::COLUMN_INDEX_NAME,

self::COLUMN_INDEX_GEOMETRY,

self::CITY,

self::STATE,

self::HAS_HEADER_ROW,

self::USE_TITLE_CASE);

}

/**

* Parses the geometry to a multipolygon from well-known text.

*

* @param mixed $geometry

* @return \Grimzy\LaravelMysqlSpatial\Types\MultiPolygon

*/

protected function parseGeometryToMultiPolygon($geometry): MultiPolygon

{

return MultiPolygon::fromWKT($geometry);

}

}

At the top of the file we have constants for the column indexes of the name and geometry data as well as constants for the neighborhood city, the neighborhood state, the file path relative to the database directory, whether or not the file has a header row, and whether or not the neighborhood names should be converted to Title Case.

Next we have the run method which is called by Laravel when executing the seeder. In this method we first resolve the path of the flat file using Laravel’s helper method database_path. Then we call the parent class’s method seedFromFlatFile using the file path and our constants as arguments.

Finally, we implement the parseGeometryToMultiPolygon method by using the fromWKT static method of Grimzy\LaravelMysqlSpatial\Types\Multipolygon to instantiate a new MultiPolygon and return it. Remember this method will be called by the base class during the execution of the seedFromFlatFile method.

Continuing with our seeders, use artisan to create BaltimoreMarylandSeeder.

$ php artisan make:seeder BaltimoreMarylandSeeder

Edit the file database/seeds/BaltimoreMarylandSeeder.php to match the contents below.

<?php

use Grimzy\LaravelMysqlSpatial\Types\MultiPolygon;

class BaltimoreMarylandSeeder extends BaseNeighborhoodSeeder

{

/**

* Index of the name column.

*/

const COLUMN_INDEX_NAME = 3;

/**

* Index of the geometry column.

*/

const COLUMN_INDEX_GEOMETRY = 1;

/**

* Name of the neighborhoods' city.

*/

const CITY = 'Baltimore';

/**

* Name of the neighborhoods' state.

*/

const STATE = 'MD';

/**

* Path of the seed file relative to the `database` directory.

*/

const DATABASE_FILE_PATH = 'seeds/flat-files/baltimore-maryland.csv';

/**

* If the file has a header row.

*/

const HAS_HEADER_ROW = true;

/**

* If the neighborhood names should be converted to Title Case.

*/

const USE_TITLE_CASE = false;

/**

* Run the database seeds.

*

* @throws \Throwable

*/

public function run()

{

// resolve the path of the seed file

$file_path = database_path(self::DATABASE_FILE_PATH);

// seed the neighborhoods from the flat file

$this->seedFromFlatFile($file_path,

self::COLUMN_INDEX_NAME,

self::COLUMN_INDEX_GEOMETRY,

self::CITY,

self::STATE,

self::HAS_HEADER_ROW,

self::USE_TITLE_CASE);

}

/**

* Parses the geometry to a multipolygon from well-known text.

*

* @param mixed $geometry

* @return \Grimzy\LaravelMysqlSpatial\Types\MultiPolygon

*/

protected function parseGeometryToMultiPolygon($geometry): MultiPolygon

{

return MultiPolygon::fromWKT($geometry);

}

}

Notice how simple it was to implement a new flat file seeder because we abstracted away the logic into our base class.

Again using artisan, create our last seeder EastBatonRougeParishSeeder.

$ php artisan make:seeder EastBatonRougeParishSeeder

Open up the file database/seeds/EastBatonRougeParishSeeder.php and edit the contents to match below.

<?php

use Grimzy\LaravelMysqlSpatial\Types\MultiPolygon;

use Grimzy\LaravelMysqlSpatial\Types\Polygon;

class EastBatonRougeParishSeeder extends BaseNeighborhoodSeeder

{

/**

* Index of the name column.

*/

const COLUMN_INDEX_NAME = 2;

/**

* Index of the geometry column.

*/

const COLUMN_INDEX_GEOMETRY = 1;

/**

* Name of the neighborhoods' city.

*/

const CITY = 'Baton Rouge';

/**

* Name of the neighborhoods' state.

*/

const STATE = 'LA';

/**

* Path of the seed file relative to the `database` directory.

*/

const DATABASE_FILE_PATH = 'seeds/flat-files/east-baton-rouge-parish-louisiana.csv';

/**

* If the file has a header row.

*/

const HAS_HEADER_ROW = true;

/**

* If the neighborhood names should be converted to Title Case.

*/

const USE_TITLE_CASE = true;

/**

* Run the database seeds.

*

* @throws \Throwable

*/

public function run()

{

// resolve the path of the seed file

$file_path = database_path(self::DATABASE_FILE_PATH);

// seed the neighborhoods from the flat file

$this->seedFromFlatFile($file_path,

self::COLUMN_INDEX_NAME,

self::COLUMN_INDEX_GEOMETRY,

self::CITY,

self::STATE,

self::HAS_HEADER_ROW,

self::USE_TITLE_CASE);

}

/**

* Parses the geometry to a multipolygon from well-known text.

*

* @param mixed $geometry

* @return \Grimzy\LaravelMysqlSpatial\Types\MultiPolygon

*/

protected function parseGeometryToMultiPolygon($geometry): MultiPolygon

{

// parse the well-known text into a polygon

$polygon = Polygon::fromWKT($geometry);

// return a multipolygon containing the polygon

return new MultiPolygon([$polygon]);

}

}

This time the implementation of the parseGeometryToMultiPolygon method is different. If you check the east-baton-rouge-parish-louisiana.csv file you’ll notice the WKT contains polygons instead of multipolygons, but the method calls for a MultiPolygon to be returned. Therefore we first parse the Polygon from WKT and then create and return a new MutliPolygon using an array containing the Polygon passed to the constructor.

Next we need to edit database/seeds/DatabaseSeeder.php to call each of our seeders. Update the file to match the contents below.

<?php

use Illuminate\Database\Seeder;

class DatabaseSeeder extends Seeder

{

/**

* Seed the application's database.

*

* @return void

*/

public function run()

{

$this->call([

ChicagoIllinoisNeighborhoodSeeder::class,

BaltimoreMarylandSeeder::class,

EastBatonRougeParishSeeder::class,

]);

}

}

Let’s quickly regenerate our autoload files using composer.

$ composer dump-autoload

Finally, let’s migrate and seed our database using an artisan command. This will create our neighborhoods table as well as seed all the neighborhoods from our flat files.

$ php artisan migrate --seed

Installing the Second Package

Use composer to require the toin0u/geocoder-laravel package we’ll be using to geocode addresses.

$ composer require "toin0u/geocoder-laravel"

While composer is running, this is a great time to get a Google API key for our project. We’ll be using Google’s Geocoding API.

- Go to the Google Cloud Console and log in using a Google account.

- Create a new project.

- Under the

APIs & Servicesdashboard, clickENABLE APIS AND SERVICES. - Enable the Geocoding API by searching for

Geocoding API, clicking on the result, and then clicking the button labeledENABLE. - Under

APIs & Servicesgo toCredentials. - Click

Createand selectAPI Key. - Copy the generated API key to your clipboard.

Now we’re going to add the necessary configuration for the geocoding package we just installed.

Edit the .env environment file at the root of our project, adding the key GOOGLE_MAPS_API_KEY and pasting in the value of your API key.

GOOGLE_MAPS_API_KEY=***************************************

For posterity’s sake let’s also add an entry in .env.example for the same key. Remember, don’t add your API key here; this file is only a template and is checked into source control.

GOOGLE_MAPS_API_KEY=

The Home Controller

Now let’s define our routes by editing routes/web.php, deleting the existing welcome route, and replacing it with the routes below.

Route::get('/', 'HomeController@show')->name('home.show');

Route::post('/', 'HomeController@submit')->name('home.submit');

The first route, named home.show, is for displaying the home page. The second route, named home.submit, will handle the submission of addresses and return a response containing the result of the search.

Next, create a HomeController using artisan.

$ php artisan make:controller HomeController

Edit app/Http/Controllers/HomeController.php to match the contents below.

<?php

namespace App\Http\Controllers;

use App\Models\Neighborhood;

use Grimzy\LaravelMysqlSpatial\Types\Point;

use Illuminate\Http\Request;

class HomeController extends Controller

{

/**

* The session key for storing the success message.

*/

const SESSION_KEY_SUCCESS = 'success';

/**

* The session key for storing the error message.

*/

const SESSION_KEY_ERROR = 'error';

/**

* The result message for an address that could not be geocoded.

*/

const RESULT_BAD_ADDRESS = 'Failed to find a location for that address!';

/**

* The result message for an address that does not fall in any exiting Neighborhood's geometry.

*/

const RESULT_NO_RESULTS = 'No results for that address!';

/**

* The result message prefix for a found Neighborhood.

*/

const RESULT_NEIGHBORHOOD_PREFIX = 'That address is in ';

/**

* The route name for showing the home page.

*/

const ROUTE_NAME_SHOW_HOME = 'home.show';

/**

* Shows the home page.

*

* @return \Illuminate\View\View

*/

public function show()

{

return view('home');

}

/**

* Handles submission of an address and returns a redirect to the home page with success or error message.

*

* @param \Illuminate\Http\Request $request

* @return \Illuminate\Http\RedirectResponse

*/

public function submit(Request $request)

{

// validate the request

$this->validate($request, [

'address' => 'required',

]);

// get the given address from the request

$address = $request->input('address');

// make the geocoder

$geocoder = app('geocoder');

// geocode the address and get the first result

$result = $geocoder->geocode($address)->get()->first();

// if a result couldn't be found, redirect to the home page with a result message flashed to the session

if (! $result) {

return redirect(route(self::ROUTE_NAME_SHOW_HOME))->with(self::SESSION_KEY_ERROR, self::RESULT_BAD_ADDRESS);

}

// get the coordinates of the geocoding result

$coordinates = $result->getCoordinates();

// get the latitude of the coordinates

$lat = $coordinates->getLatitude();

// get the longitude of the coordinates

$lng = $coordinates->getLongitude();

// create a new point using the coordinates

$point = new Point($lat, $lng);

// get the first Neighborhood that has geometry containing the point

$neighborhood = Neighborhood::contains('geometry', $point)->first();

// if a Neighborhood couldn't be found, redirect to the home page with a result message flashed to the session

if (! $neighborhood) {

return redirect(route(self::ROUTE_NAME_SHOW_HOME))->with(self::SESSION_KEY_ERROR, self::RESULT_NO_RESULTS);

}

// format the result message for the found Neighborhood

$message = $this->formatNeighborhoodResult($neighborhood);

// redirect to the home page with the result message flashed to the session

return redirect(route(self::ROUTE_NAME_SHOW_HOME))->with(self::SESSION_KEY_SUCCESS, $message);

}

/**

* Format the result message for a found neighborhood.

*

* @param \App\Models\Neighborhood $neighborhood

* @return string

*/

private function formatNeighborhoodResult(Neighborhood $neighborhood) {

return self::RESULT_NEIGHBORHOOD_PREFIX . $neighborhood->name . ', ' . $neighborhood->city . ', ' . $neighborhood->state . '.';

}

}

In this file we first define constants for the session key storing success messages, the session key storing error messages, the text for result messages, and the home.show route name.

In the show method we simply return the view named home using the Laravel helper method view.

The submit method accepts an argument of type Illuminate\Http\Request called $request. Laravel will automatically inject this variable containing the current request data.

First we validate the request by specifying a rule for address making the field required. The validated address is then retrieved using the input method on the $request variable. We use Laravel’s app helper method which uses the service container to resolve an instance of the geocoder. Using method chaining we geocode the given address and get the first result. If a result couldn’t be found for the given address we redirect the user to the home page with an error message flashed to the session.

Next we get the longitude and latitude coordinates from the result and create a new Grimzy\LaravelMysqlSpatial\Types\Point instance by passing the coordinates into the constructor.

The eloquent query scope contains provided by the grimzy/laravel-mysql-spaital package is then used to scope the query by records with a geometry containing the point. We use the first method to get the first result. This will generate a query along the lines of:

SELECT * FROM `neighborhoods` WHERE ST_Contains(`geometry`, ST_GeomFromText('POINT(0 0)')) LIMIT 1

In this case 'POINT(0 0)' is the WKT representation of our longitude and latitude (which won’t actually be 0, 0 unless our user lives in the middle of the ocean).

Notice that we are using MySQL to calculate if the the geometry contains the point. This is much faster than if we had chunked through all the records and had done the calculation in PHP.

Finally, if a resulting neighborhood containing the point couldn’t be found we return a redirect to the home page with an error message flashed to the session. Otherwise, we format the neighborhood name, city, and state into a success result message (using the formatNeighborhoodResult method) and return a redirect to the home page with the message flashed to the session.

The Home View

Rename the blade template at resources/views/welcome.blade.php to resources/views/home.blade.php and open the file.

Under the <!-- Styles --> comment, add a link to Bootstrap’s style sheet.

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/4.0.0-alpha.6/css/bootstrap.min.css" integrity="sha384-rwoIResjU2yc3z8GV/NPeZWAv56rSmLldC3R/AZzGRnGxQQKnKkoFVhFQhNUwEyJ" crossorigin="anonymous">

Next, in the .title class definition, change the font size to something smaller.

.title {

font-size: 64px;

}

Just before the closing </body> tag, add script tags for Bootstrap’s dependencies jQuery, Popper.js, and Bootstrap’s minified JavaScript file.

<script src="https://code.jquery.com/jquery-3.1.1.slim.min.js" integrity="sha384-A7FZj7v+d/sdmMqp/nOQwliLvUsJfDHW+k9Omg/a/EheAdgtzNs3hpfag6Ed950n" crossorigin="anonymous"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/tether/1.4.0/js/tether.min.js" integrity="sha384-DztdAPBWPRXSA/3eYEEUWrWCy7G5KFbe8fFjk5JAIxUYHKkDx6Qin1DkWx51bBrb" crossorigin="anonymous"></script>

<script src="https://maxcdn.bootstrapcdn.com/bootstrap/4.0.0-alpha.6/js/bootstrap.min.js" integrity="sha384-vBWWzlZJ8ea9aCX4pEW3rVHjgjt7zpkNpZk+02D9phzyeVkE+jo0ieGizqPLForn" crossorigin="anonymous"></script>

Because our application has no authentication, remove the entire @if (Route::has(‘login’)) directive including the contents and closing directive.

Finally, edit the contents of the <div class="content"> div to match below.

<div class="content">

<div class="title m-b-md">

Neighborhood Finder

</div>

@if (session('success'))

<div class="alert alert-success">

{{ session('success') }}

</div>

@elseif (session('error'))

<div class="alert alert-danger">

{{ session('error') }}

</div>

@endif

<div class="col-12 text-left">

<form class="form-horizontal" method="POST" action="{{ route('home.submit') }}">

@csrf

<div class="form-group{{ $errors->has('address') ? ' has-error' : '' }}">

<label for="address" class="control-label">Enter an address</label>

<input id="address" name="address" type="text" class="form-control" required autofocus>

@if ($errors->has('address'))

<span class="help-block">

<strong>{{ $errors->first('address') }}</strong>

</span>

@endif

</div>

<div class="form-group text-right">

<button type="submit" class="btn btn-primary">

Submit

</button>

</div>

</form>

</div>

</div>

Here we use the blade directive @if to check if there’s a message in the success key of the current session. If there is one, we display an alert to the user containing the message. If there isn’t one, we use the blade directive @elseif to check if there’s a message in the error key of the current session, again displaying it to the user if it exists.

Next we define a form with an action specifying our submission route using the route helper method. The @crsf blade directive is used to generate a Cross-Site Request Forgery field and token. If the $errors message bag contains an entry for address we add the has-error class to the form group div and display the error in a help block.

Conclusion

That’s it! Open the project in your browser and try out some different addresses! The address will be geocoded and a neighborhood will be returned if any neighborhoods in the database have a boundary containing the address’s coordinates. If no address coordinates or neighborhood could be found, an error message stating such will be returned.

Try some of these addresses:

1558 N Damen Ave, Chicago, IL 606221001 E Fayette St, Baltimore, MD 21202201 Community College Dr, Baton Rouge, LA 70806123 Fake St, NowhereYour Address

Additional Resources

For additional data sources I encourage you to check out this Forbes article as well as Open Data Inception.

You can view the source code for this project on GitHub.

KRACK is Back … Meet KrØØk!

If you don’t already know, WPA-2 is not a good Wi-fi standard. Basically it can be breached through the flawed four way handshake. But now it gets even worse, and where ESET Research have published a white paper defining KrØØk (CVE-2019–15126), and which is based on the previously discovered KRACK vulnerability. Within this the researchers manage to force a device to use a “All Zeros” key, and thus decrypt all the packets sent from the device:

The research has shown that two of the most popular wifi chipset manufacters — Broadcom and Crypress — are vulnerabily to thus, which could mean that millions (or even billions) or devices are exposed to the vulnerability. These chipers are used in wifi routers and access points, and affects both home-based wifi (WPA-2 Personal) and enterprise networks (WPA-2 Enterprise).

KRACK

It’s a well-know secret, but the WPA-2 handshaking method used in your home Wi-fi system is flawed. The authentication method used is defined as WPA2-Personal, and that we need to migrate to something which is more secure. While your corporate wi-fi network (and which uses WPA3-Enterprise) is fairly bullet proof, the 4-way handshake method used on WPA2-Personal networks can be cracked with a single capture of a packet [details]:

And so, on 16 October 2017, the weakness of the four-way handshake was further highlighted with KRACK (Key Reinstallation Attacks) vulnerability [paper]:

US-CERT has become aware of several key management vulnerabilities in the 4-way handshake of the Wi-Fi Protected Access II (WPA2) security protocol. The impact of exploiting these vulnerabilities includes decryption, packet replay, TCP connection hijacking, HTTP content injection, and others. Note that as protocol-level issues, most or all correct implementations of the standard will be affected. The CERT/CC and the reporting researcher KU Leuven, will be publicly disclosing these vulnerabilities on 16 October 2017.

Within the four-way handshake, in the third step, the protocol allows for the key to be sent many times. On resending, the nonce is reused, and which compromises the handshaking, and cracks the crypto.

Meet the Simultaneous Authentication of Equals (aka Dragonfly)

And so the Simultaneous Authentication of Equals(SAE, and also typically known as Dragonfly) method has been introduced in WPA-3 Personal and which replaces with 4-way handshake with a zero-knowledge proof method (see theory later on in the article). Unfortunately the same team that defined the KRACK vulnerability has found new weaknesses in its setup [here].

Within their research, the research team found that they could recover the password used for the network, and thus steal sensitive information from the communications. These attacks were:

- Downgrade attack. This involves reducing the security level used by the protocol, and relates to the backwards comparability with WPA-2. With this we get WPA3-Transistion, and which uses the WPA-2 method to connect to the access point, and thus steal the password.

- Three side channel attacks. One of these attacks use a security group which uses a prime number of its modulo function. The attacker can then observe how long the access point takes to compute a password, and then narrow the range of possible passwords used. Another two side-channel attacks involved investigating the running memory, and where a dictionary attack could be used to analyse the patterns created. The research team estimated that they could brute-force all 8-character lowercase passwords for $125 on Amazon EC2 instances.

- Denial of service (DoS) attack. This involves side-stepping the WPA3 DoS method for detecting fake MAC addresses, and where the research team managed to bring the network down with just 16 forged connection attempts per second.

The full details of the vulnerabilities are defined here:

- CERT ID #VU871675: Downgrade attack against WPA3-Transtition mode leading to dictionary attacks.

- CERT ID #VU871675: Security group downgrade attack against WPA3’s Dragonfly handshake.

- CVE-2019–9494: Timing-based side-channel attack against WPA3’s Dragonfly handshake.

- CVE-2019–9494: Cache-based side-channel attack against WPA3’s Dragonfly handshake.

- CERT ID #VU871675: Resource consumption attack (i.e. denial of service) against WPA3’s Dragonfly handshake.

While all of these relate to WPA-3 Personal, the research team also found a bug in the EAP-PWD protocol, and where an intruder could impersonate a user on a network, without knowing their password. This bug affects WPA-3 Enterprise, as it focuses on RADIUS integration. Details have not been released at the current time on this.

Dragonfly

It has been known for a while that the core of Wi-fi — WPA-2 (IEEE 802.11i) — is weak in its implementation of security. While its cryptography is sound — using AES encryption — its main weakness focuses on the flawed 4-way handshake.

Within this, the client and access point pass a hashed value of the SSID and the password, and this can be seen by an intruder. Its saving grace is that the hashing method used — PBKDF2 — is a relatively slow method, and when it was initially rolled-out it would have been relatively expensive to crack it. Unfortunately, these days, cloud crackers can now run at high speeds, and even PBKDF2 can be cracked within reasonable time limits and for a minimal cost.

The great worry, though, is that once it is cracked, it is cracked for the whole network. With 16-GPU clusters available to rent within AWS for less than $10 per hour, the opportunity exists for the core of wi-fi to fundamentally fail within the next few years.

And so, the Wi-Fi Alliance has addressed this fundamental problem with the release of WPA-3, and where the 4-way handshake is replaced by a zero-knowledge proof method named Simultaneous Authentication of Equals (SAE). Its roots derive from mesh networks and of the Dragonfly protocol, and have since been defined in IEEE 802.11s.

SAE

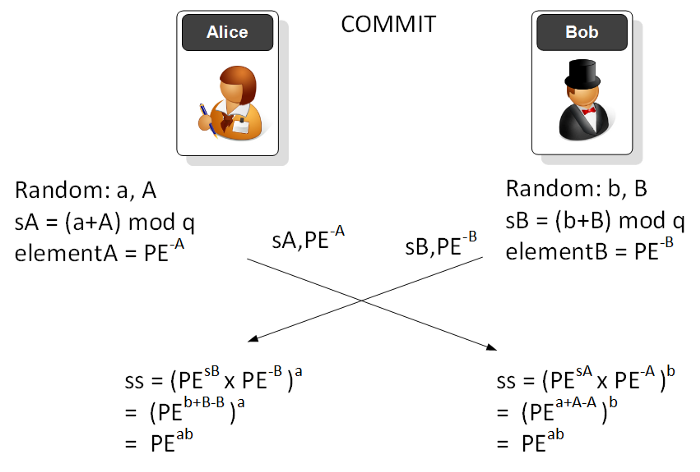

The focus for SAE is to properly authenticate a device onto a network and using a password and MAC addresses to authenticate. An intruder should not be able to connect to a network unless they known password that the access point uses. Also, an intruder should not be able to guess either the password or the long-term authentication element of the password. In WPA-2 an intruder can listen to the 4-way handshake and can then brute force the password from hashed value created (see the section below for details).